On a number of Drupal sites that I am involved with recently I see the error message when either trying to dump or restore certain databases:

ERROR 2020 (HY000) at line 1: Got packet bigger than 'max_allowed_packet'It is important to note that with MySQL there are 2 settings for max_allowed_packet, the client (what you are connecting from), and the server (what you are connecting to).

In order to find out the client's current settings, you can run:

$ mysql --help | grep max-allowed-packet | grep -v '#' | awk '{print $2/(1024*1024)}'

16So 16MB on the client side.

In order to find out the server's current setting, you can run:

SHOW VARIABLES LIKE 'max_allowed_packet';

+--------------------+----------+

| Variable_name | Value |

+--------------------+----------+

| max_allowed_packet | 67108864 |

+--------------------+----------+So 64MB on the server side.

So at the moment:

- The client cannot send or receive more than

16MBin a single statement - The server cannot send or receive more than

64MBin a single statement

So you can end up in a position where there is content in your database, that is working fine, but you cannot dump the database, nor can you restore from a dump (with your current client configuration).

Take the search_api_attachments module in Drupal, it uses the key_value table in Drupal 8 to store extracted text from documents.

SELECT name, length(value) as size FROM key_value ORDER BY size DESC limit 5;

+------------------------------+----------+

| name | size |

+------------------------------+----------+

| search_api_attachments:14646 | 33623803 |

| search_api_attachments:14288 | 3394023 |

| search_api_attachments:13146 | 2356958 |

| search_api_attachments:4921 | 1554830 |

| search_api_attachments:1586 | 1549981 |

+------------------------------+----------+key_value tableSolution 1 - alter max_allowed_packet

The first solution is simply to alter the max_allowed_packet size to accommodate the size needed (on both the client and server). The only issue is that this is a game of cat and mouse. As soon as you tune the size to be larger, a content editor will upload a larger document.

It also means your database size will grow fairly unchecked, especially if you have heavy editorial where documents are commonly uploaded.

While I do advocate for sensible defaults, I think having > 64MB in a single cell in a table as potentially overkill for the benefits it provides.

Solution 2 - reduce the amount in the database

The point of indexing attachments is to ensure that you can still find content that is buried in binary files. I would argue that most of the important keywords of these documents will be in the first few pages. Indexing the entire document often will provide limited added value (if any).

Reading through the code of the search_api_attachments module, I spot these useful lines:

/**

* Limit the indexed text to first N bytes.

*

* @param string $extracted_text

* The hole extracted text.

*

* @return string

* The first N bytes of the extracted text that will be indexed and cached.

*/

public function limitBytes($extracted_text) {

$bytes = 0;

if (isset($this->configuration['number_first_bytes'])) {

$bytes = Bytes::toInt($this->configuration['number_first_bytes']);

}

// If $bytes is 0 return all items.

if ($bytes == 0) {

return $extracted_text;

}

else {

$extracted_text = mb_strcut($extracted_text, 0, $bytes);

}

return $extracted_text;

}So it turns out, baked into the module, is a way to effectively limit the number of characters stored in the database (drupal.org issue).

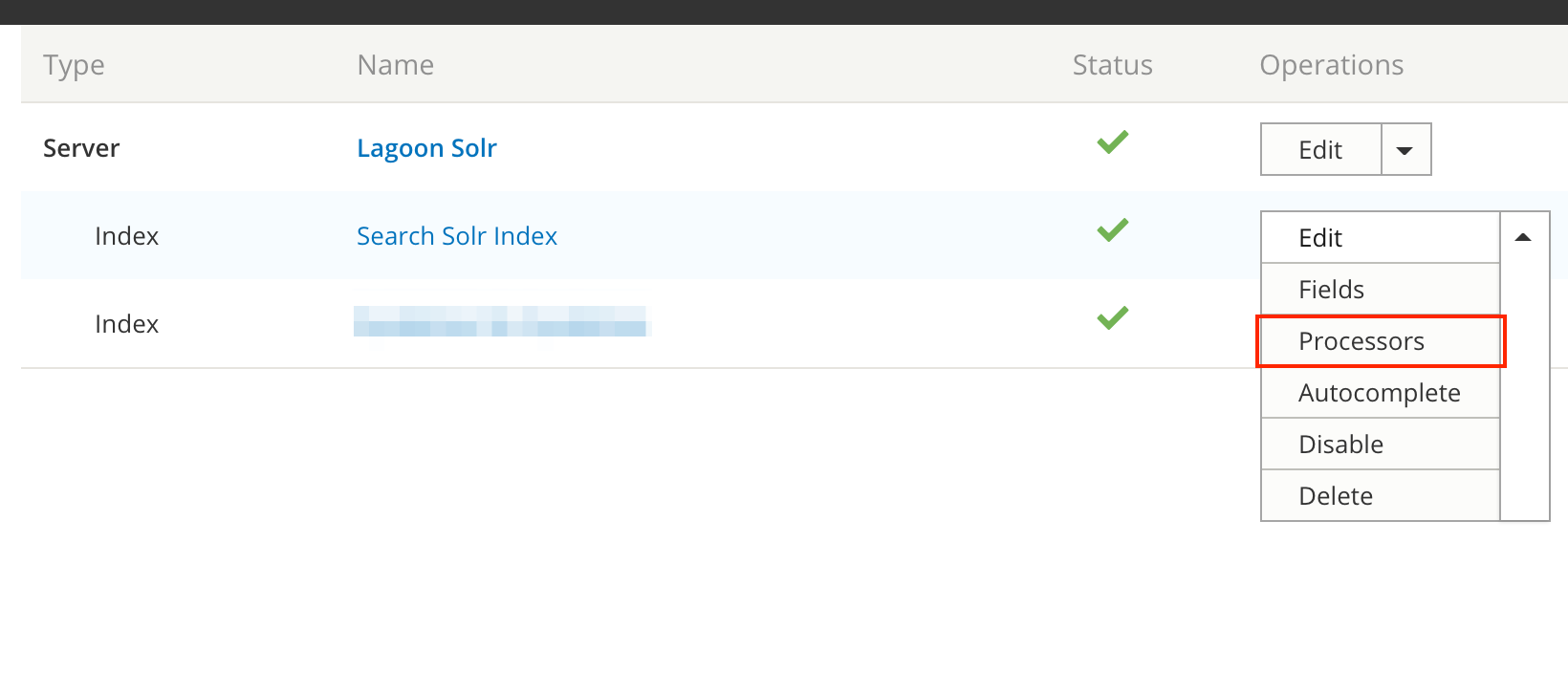

In order to enable this feature, you need to edit the Processors for a given index:

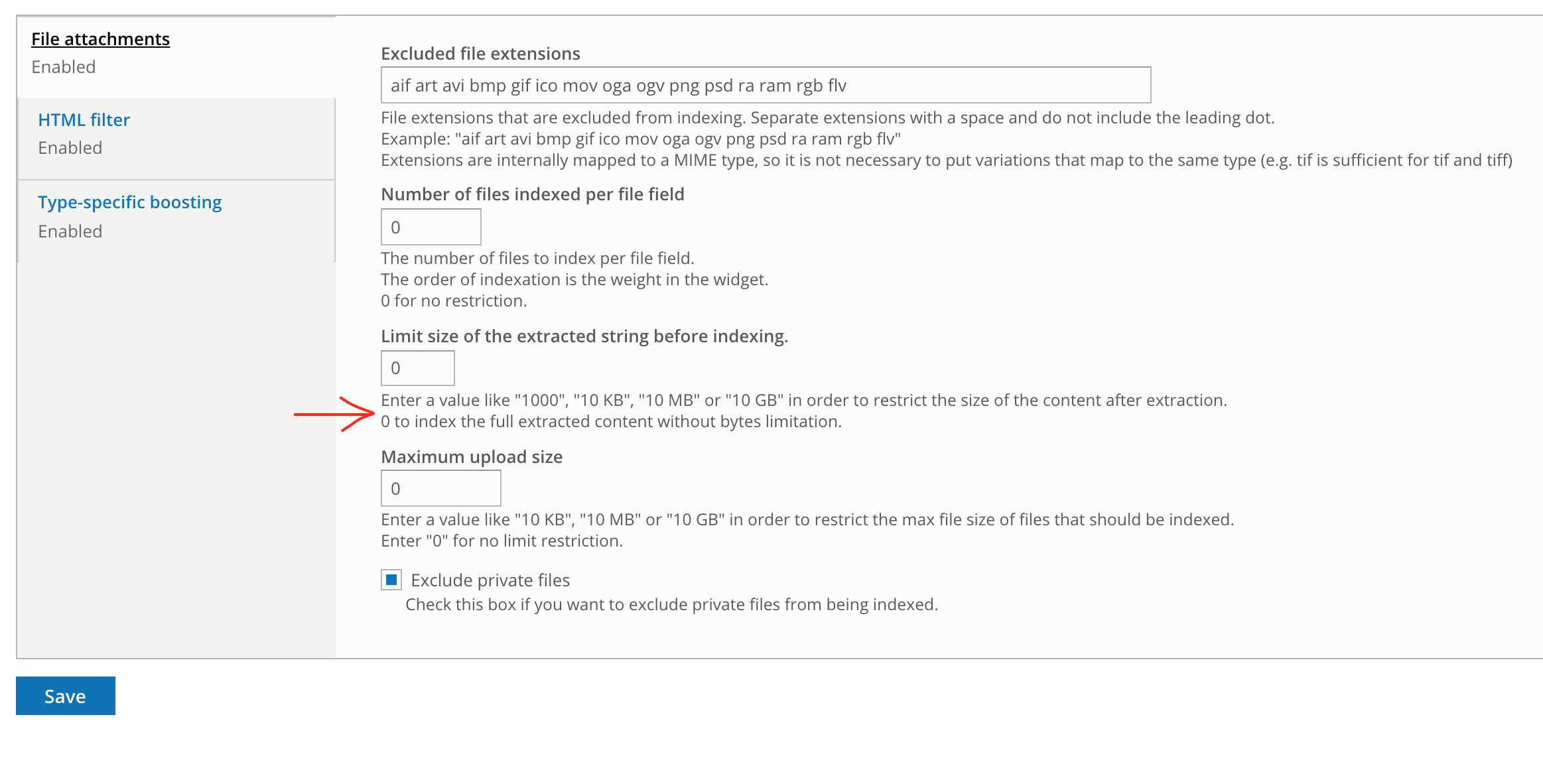

Inside this page, is a configuration form that allows you to set a max limit for the amount stored in the database.

After setting the size to 100 KB which seems like a reasonable number, and then re-indexing the appropriate index, you see the results

SELECT name, length(value) as size FROM key_value ORDER BY size DESC limit 5;

+--------------------------------+--------+

| name | size |

+--------------------------------+--------+

| node.field_storage_definitions | 116308 |

| search_api_attachments:831 | 102412 |

| search_api_attachments:1536 | 102412 |

| search_api_attachments:1571 | 102412 |

| search_api_attachments:1576 | 102412 |

+--------------------------------+--------+So this will mean that the database is a lot smaller, allowing faster database backups, restores, and rollbacks. It also will save a lot of issues around forever tuning max_allowed_packet.

In the hopes that the module maintainers make a more sensible default limit I have also raised this issue to get a default value set. Having any size limit would be better than having no limit.

It is also worth noting that this key_value storage is actually a caching system for search_api_attachments, and that this table will be used, even if your only search servers are external to Drupal (e.g. Solr), and you make no use of DB searching.