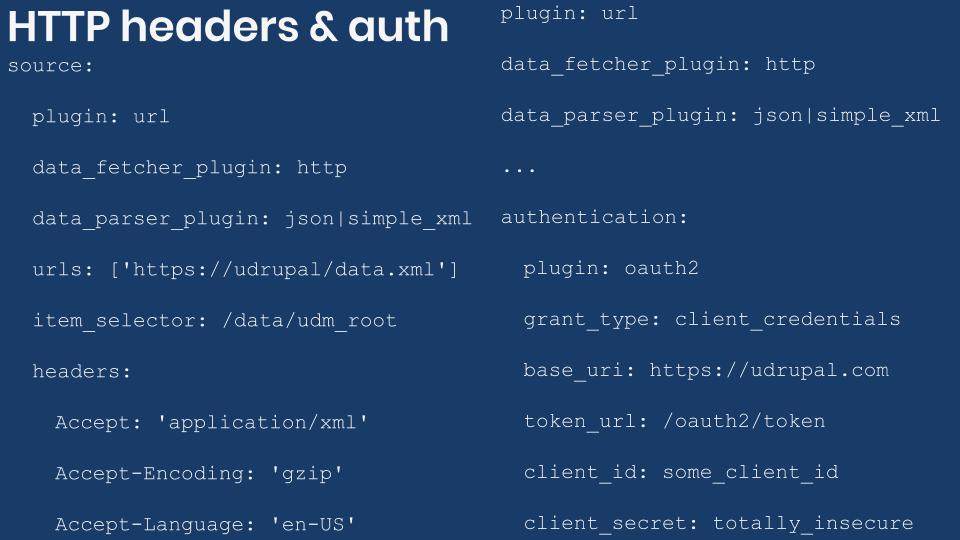

In the previous two blog posts, we learned to migrate data from JSON and XML files. We presented to configure the migrations to fetch remote files. In today's blog post, we will learn how to add HTTP request headers and authentication to the request. . For HTTP authentication, you need to choose among three options: Basic, Digest, and OAuth2. To provide this functionality, the Migrate API leverages the Guzzle HTTP Client library. Usage requirements and limitations will be presented. Let's begin.

Migrate Plus architecture for remote data fetching

The Migrate Plus module provides an extensible architecture for importing remote files. It makes use of different plugin types to fetch file, add HTTP authentication to the request, and parse the response. The following is an overview of the different plugins and how they work together to allow code and configuration reuse.

Source plugin

The url source plugin is at the core of the implementation. Its purpose is to retrieve data from a list of URLs. Ingrained in the system is the goal to separate the file fetching from the file parsing. The url plugin will delegate both tasks to other plugin types provided by Migrate Plus.

Data fetcher plugins

For file fetching, you have two options. A general-purpose file fetcher for getting files from the local file system or via stream wrappers. This plugin has been explained in detail on the posts about JSON and XML migrations. Because it supports stream wrapper, this plugin is very useful to fetch files from different locations and over different protocols. But it has two major downsides. First, it does not allow setting custom HTTP headers nor authentication parameters. Second, this fetcher is completely ignored if used with the xml or soap data parser (see below).

The second fetcher plugin is http. Under the hood, it uses the Guzzle HTTP Client library. This plugin allows you to define a headers configuration. You can set it to a list of HTTP headers to send along with the request. It also allows you to use authentication plugins (see below). The downside is that you cannot use stream wrappers. Only protocols supported by curl can be used: http, https, ftp, ftps, sftp, etc.

Data parsers plugins

Data parsers are responsible for processing the files considering their type: JSON, XML, or SOAP. These plugins let you select a subtree within the file hierarchy that contains the elements to be imported. Each record might contain more data than what you need for the migration. So, you make a second selection to manually indicate which elements will be made available to the migration. Migrate plus provides four data parses, but only two use the data fetcher plugins. Here is a summary:

jsoncan use any of the data fetchers. Offers an extra configuration option calledinclude_raw_data. When set to true, in addition to all thefieldsmanually defined, a new one is attached to the source with the nameraw. This contains a copy of the full object currently being processed.simple_xmlcan use any data fetcher. It uses the SimpleXML class.xmldoes not use any of the data fetchers. It uses the XMLReader class to directly fetch the file. Therefore, it is not possible to set HTTP headers or authentication.xmldoes not use any data fetcher. It uses the SoapClient class to directly fetch the file. Therefore, it is not possible to set HTTP headers or authentication.

The difference between xml and simple_xml were presented in the previous article.

Authentication plugins

These plugins add authentication headers to the request. If correct, you could fetch data from protected resources. They work exclusively with the http data fetcher. Therefore, you can use them only with json and simple_xml data parsers. To do that, you set an authentication configuration whose value can be one of the following:

basicfor HTTP Basic authentication.digestfor HTTP Digest authentication.oauth2for OAuth2 authentication over HTTP.

Below are examples for JSON and XML imports with HTTP headers and authentication configured. The code snippets do not contain real migrations. You can also find them in the ud_migrations_http_headers_authentication directory of the demo repository https://github.com/dinarcon/ud_migrations.

Important: The examples are shown for reference only. Do not store any sensitive data in plain text or commit it to the repository.

JSON and XML Drupal migrations with HTTP request headers and Basic authentication.

source:

plugin: url

data_fetcher_plugin: http

# Choose one data parser.

data_parser_plugin: json|simple_xml

urls:

- https://understanddrupal.com/files/data.json

item_selector: /data/udm_root

# This configuration is provided by the http data fetcher plugin.

# Do not disclose any sensitive information in the headers.

headers:

Accept-Encoding: 'gzip, deflate, br'

Accept-Language: 'en-US,en;q=0.5'

Custom-Key: 'understand'

Arbitrary-Header: 'drupal'

# This configuration is provided by the basic authentication plugin.

# Credentials should never be saved in plain text nor committed to the repo.

autorization:

plugin: basic

username: totally

password: insecure

fields:

- name: src_unique_id

label: 'Unique ID'

selector: unique_id

- name: src_title

label: 'Title'

selector: title

ids:

src_unique_id:

type: integer

process:

title: src_title

destination:

plugin: 'entity:node'

default_bundle: pageJSON and XML Drupal migrations with HTTP request headers and Digest authentication.

source:

plugin: url

data_fetcher_plugin: http

# Choose one data parser.

data_parser_plugin: json|simple_xml

urls:

- https://understanddrupal.com/files/data.json

item_selector: /data/udm_root

# This configuration is provided by the http data fetcher plugin.

# Do not disclose any sensitive information in the headers.

headers:

Accept: 'application/json; charset=utf-8'

Accept-Encoding: 'gzip, deflate, br'

Accept-Language: 'en-US,en;q=0.5'

Custom-Key: 'understand'

Arbitrary-Header: 'drupal'

# This configuration is provided by the digest authentication plugin.

# Credentials should never be saved in plain text nor committed to the repo.

autorization:

plugin: digest

username: totally

password: insecure

fields:

- name: src_unique_id

label: 'Unique ID'

selector: unique_id

- name: src_title

label: 'Title'

selector: title

ids:

src_unique_id:

type: integer

process:

title: src_title

destination:

plugin: 'entity:node'

default_bundle: pageJSON and XML Drupal migrations with HTTP request headers and OAuth2 authentication.

source:

plugin: url

data_fetcher_plugin: http

# Choose one data parser.

data_parser_plugin: json|simple_xml

urls:

- https://understanddrupal.com/files/data.json

item_selector: /data/udm_root

# This configuration is provided by the http data fetcher plugin.

# Do not disclose any sensitive information in the headers.

headers:

Accept: 'application/json; charset=utf-8'

Accept-Encoding: 'gzip, deflate, br'

Accept-Language: 'en-US,en;q=0.5'

Custom-Key: 'understand'

Arbitrary-Header: 'drupal'

# This configuration is provided by the oauth2 authentication plugin.

# Credentials should never be saved in plain text nor committed to the repo.

autorization:

plugin: oauth2

grant_type: client_credentials

base_uri: https://understanddrupal.com

token_url: /oauth2/token

client_id: some_client_id

client_secret: totally_insecure_secret

fields:

- name: src_unique_id

label: 'Unique ID'

selector: unique_id

- name: src_title

label: 'Title'

selector: title

ids:

src_unique_id:

type: integer

process:

title: src_title

destination:

plugin: 'entity:node'

default_bundle: pageWhat did you learn in today’s blog post? Did you know the configuration names for adding HTTP request headers and authentication to your JSON and XML requests? Did you know that this was limited to the parsers that make use of the http fetcher? Please share your answers in the comments. Also, I would be grateful if you shared this blog post with others.

This blog post series, cross-posted at UnderstandDrupal.com as well as here on Agaric.coop, is made possible thanks to these generous sponsors: Drupalize.me by Osio Labs has online tutorials about migrations, among other topics, and Agaric provides migration trainings, among other services. Contact Understand Drupal if your organization would like to support this documentation project, whether it is the migration series or other topics.